Focus on privacy, trust, and UX

Developer conferences have evolved a lot since they first appeared. While they still target developers, conferences like Google I/O have branched out with content for product managers and other stakeholders as well. So if you want to learn about Google’s ecosystem, this conference is the place to be. You get an update on how Google is doing across all of its platforms, which new features it has developed, and which old ones will be removed. Plus, you get a sneak peek at what the future holds for those platforms.

This year’s Google I/O took place last week, and Google had a truckload of information for us. We picked up on some of the big trends that could impact our customers’ digital products.

Let’s have a look at the main takeaways from an Android developer’s point of view.

Privacy

Privacy has always been a delicate topic. Lawsuits and the GDPR have brought it to the foreground, and the emergence of COVID-19 contact tracing apps has highlighted its importance even more. During the conference, Google made it clear that it has put a lot of thought and effort into respecting users’ privacy across all of its products.

Google has improved existing features such as one-time permissions in Android, and has removed a long-standing, dubious requirement for location access when an app needed to scan for Bluetooth devices. Machine-learning-powered features such as Live Caption and Now Playing have always run locally on the device, and Google has taken this a step further with Private Compute Core: an isolated, open-source component of the Android OS for features that operate on sensitive data. Developers can use it to provide an extra layer of security for their users.

Other initiatives include an improved password manager, a step toward a password-free future, and a Privacy Sandbox for web developers to operate on or save sensitive data such as gender, ethnicity, and religion.

Trust

We believe trust is a fickle thing: hard to gain, easy to lose. With practices like user tracking and data sharing, a lot of companies are struggling with this. Google acknowledges the problem and wants to regain the trust of its users. How? By being transparent about search results and sensor usage on phones, and by open-sourcing its Private Compute Core API, which is designed to perform sensitive operations.

For example, the new “About this result” feature gives users context about a search result. It’s designed to combat misinformation, and its development was fueled by an enormous spike in “is it true that” search queries. It should help restore trust in news stories and lend credibility to search results.

Another example in the “trust” area is a new Android 12 feature that shows an indicator whenever one of the cameras or the microphone is in use, taking a page from Apple’s playbook. This is an important step to keep users informed and to prove Google’s trustworthiness. Users already have access to tools that show them which permissions an app has, but these will be even more fine-grained in Android 12. Users will also be able to block every app from accessing the camera or microphone with OS-level switches, similar to the ones for Wi-Fi or Bluetooth.

To help developers audit how their apps use data, Google pointed them to auditing tools such as AppOpsManager. If personal data needs to be processed, developers will be able to use the Private Compute Core API to do so, which helps protect their users’ data.

User Experience

Google knows how to please its users. New APIs, integrations, and models give developers and UX designers the chance to craft an excellent user experience.

With new APIs and an updated launch mechanism, Android 12 should perform better and launch apps faster. The new launch mechanism also paves the way for smoother launch animations that are easier to implement. On top of that, Jetpack Compose, Android’s new UI toolkit, means for Android what SwiftUI meant for iOS: a modern, declarative way to build layouts.

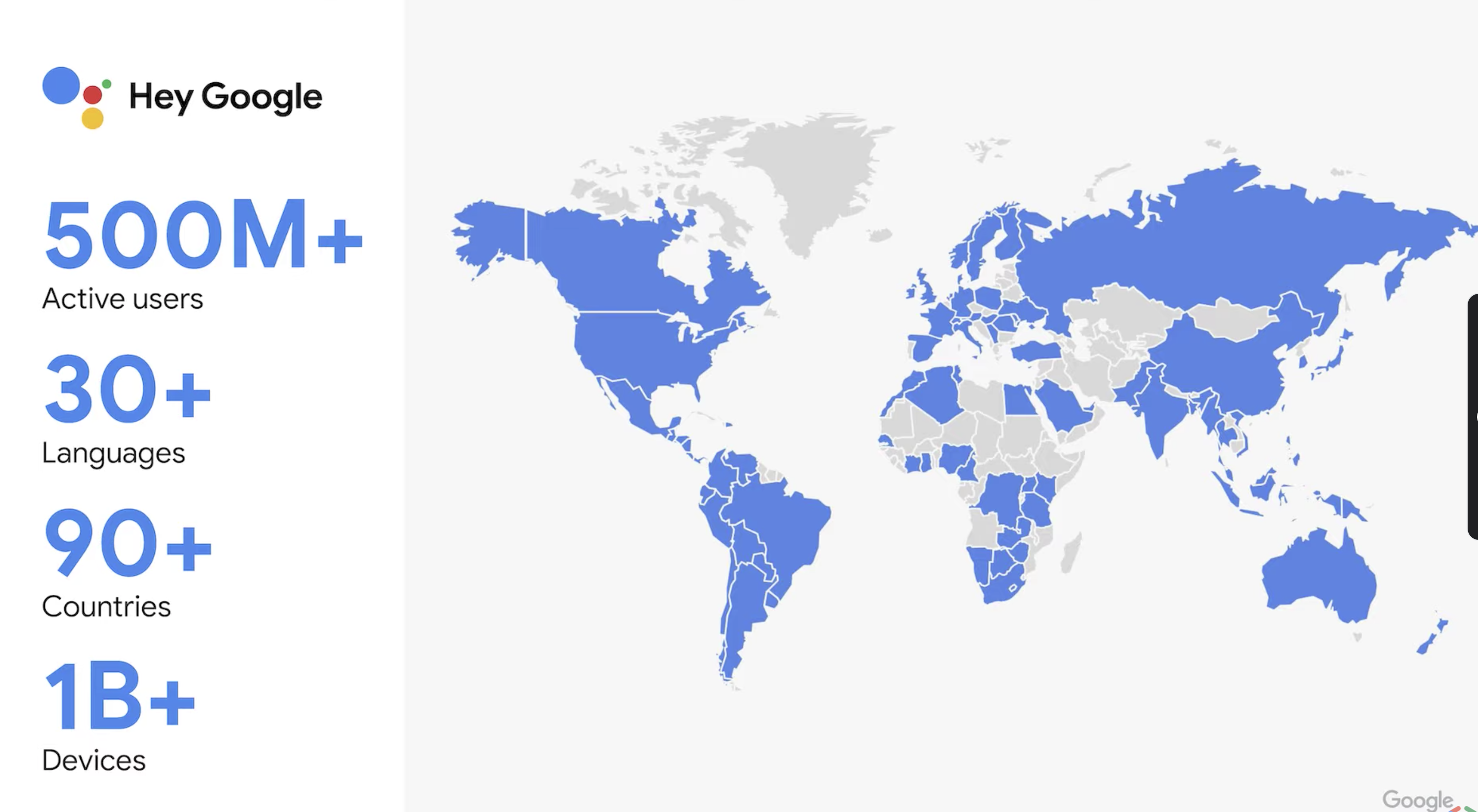

Integration has always been one of Android’s strong suits. Apps have always been able to exchange information, as long as the user allows it and the receiving app supports the data format. Google will build on this strength for Google Assistant by introducing the Capabilities API, vertical intents, improved voice actions, widget integration, and more. Third-party apps will be able to surface their content in relevant search results and make that content actionable, and users will be able to navigate that content by voice as well.

Showcases and Moonshots

Google wouldn’t be Google if it didn’t show how teams around the world are using its products to move the needle on what’s technically feasible. Between sessions, short videos showed people using Google’s AI to improve the lives of their target audiences.

For example, a robotics team worked with a young musician who lost his left forearm in a car accident. By combining AI and robotics, they helped him play the drums again. This is an exceptionally hard challenge, since there can be virtually no latency between the command and the prosthetic’s action. If the latency is too high, the musician will play off-beat, or won’t be able to play music at all.

Another team was working on software that supports doctors when triaging mammograms. In the same vein, Google showcased a project that helps people who normally have no access to dermatologists reach one for a diagnosis.

At the end of the keynote, Google unveiled Project Starline, a project it has been working on for a few years that has become much more relevant during the last 14 months of social isolation. Project Starline creates a 3D scan of the subject and displays it on a special screen, creating the illusion that your conversation partner is physically present. Google is now looking for partners such as hospitals and long-term care facilities, which it expects will see a big improvement in their patients’ mental well-being when they use it to communicate with loved ones.

A lot to think about, and a lot to use. We’ll be taking these new features and integrations into account for our existing and new customers and partners. We’re curious about your thoughts on Google I/O, so feel free to share them.